In the Computational Science and Engineering (CSE) Division this means applying for grants, writing software, running simulations, etc. During these extraordinary times, computational scientist Dawn Geatches highlights a few recent, mostly non-coronavirus-related, achievements of staff within CSE.

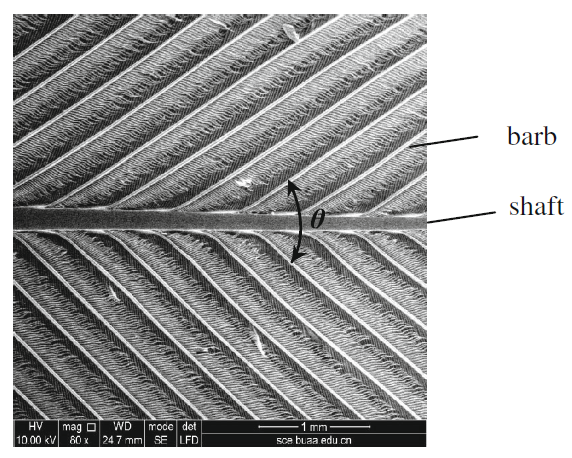

The Computational Engineering Group was recently successful with a proposal from Jian Fang for compute time under PRACE (Partnership for Advanced Computing in Europe). Involving Prof Shan Zhong at the University of Manchester, the project 'FlowCDR - Flow control using convergent-divergent riblets, a type of bio-inspired micro-scale surface patterns' takes its inspiration from nature. The grooves and ridges found in bird feathers and along the bodies of adult sharks (see Figure 1) appear to reduce frictional drag with the surrounding fluid. Being able to design and engineer this property would have important industrial applications, and the award of 60 million core hours on a German supercomputer is an important step along this 'groovy' path.

Figure 1: Microscopic structure of bird flight feathers (left, Chen et al. 2014) and divergent riblet pattern upstream of the pores of the lateral line of an adult blue shark (right, Koeltzsch et al. 2002).

Exascale high performance computing (HPC) looms on the horizon, bringing both opportunities and challenges. Performing a quintillion* (10¹⁸) calculations per second, exascale HPC tantalises with the potential for more realistic simulations of the chemical and physical processes spanning the breadth of CSE's work. To take advantage of this opportunity, the current simulation software built for petascale computing must be overhauled or, where that is impossible, replaced with new software. This will take time to perfect, as acknowledged by the recent EPSRC (Engineering and Physical Sciences Research Council) call for Exascale Computing Algorithms and Infrastructures Benefitting UK Research (ExCALIBUR), a £45.7m Strategic Priorities Fund (SPF) programme. CSE submitted several proposals to this call.

The Computational Engineering Group is continuing its track record as a key national and international actor in developing scientific applications for the Exascale era, thanks to its contribution to two successful EPSRC grants under the ExCALIBUR High Priority Use Cases: Phase 1 call. The first project is led by Imperial College and will investigate fundamental turbulent flow simulations at the Exascale, with applications in wind energy and green aviation. The second project is jointly led by Cambridge University and UCL and focuses on Exascale Computing for System-Level Engineering: Design, Optimisation and Resilience; within it, the Group is further developing the lattice Boltzmann method for large-scale High Performance Computing (HPC). The Group has also been awarded access to Summit, currently the world's most powerful pre-Exascale facility, located at Oak Ridge National Laboratory, through a Director's Discretion Award. The project, led by STFC in collaboration with Barcelona Supercomputing Centre, will assess the performance of a multi-scale Finite Element method for predicting extremely large deformations of composite materials such as those used in aircraft wings.

One of the Computational Chemistry Group's (CCG) ExCALIBUR proposals was funded to set up a Design and Development Working Group (DDWG) for Materials and Molecular Modelling (MMM) at Exascale. It involves CSE scientists Tom Keal, Ian Bush and Alin Elena, as well as the project lead, Scott Woodley from University College London, and other collaborators from, for example, EPCC (Edinburgh Parallel Computing Centre) and the Universities of York and Lincoln. The project aims to separate the fundamental mathematics of the problem from the computer science of its implementation. The DDWG will take the methods and libraries that currently work well, identify those being developed across disciplines, and use these to design an interdisciplinary exascale methodology, including new workflows to manage and analyse vast volumes of simulation data. As a result, the UK MMM community will be able to use exascale HPC resources efficiently and address EPSRC Grand Challenges spanning chemistry, physics and engineering.

A second CCG proposal narrowly missed a top-10 ranking, placing 11th, despite describing an excellent project to develop a new class of molecular simulation methods. These methods address three crucial problems for successful HPC deployment: the accuracy and predictability of the molecular model, scaling to exascale CPU core counts, and the inclusion of the physics essential to the phenomena studied. The team of high-calibre scientists, including Vlad Sokhan, Ilian Todorov, Alin Elena and Ian Bush (Physics Group), proposes the development of a multiscale method, namely quantum coarse graining, for exascale computing. By uniting the accuracy of quantum chemical calculations with the speed and scale of classical coarse graining, this project paves the way for predictive next-generation applications in condensed matter physics, biochemical research and pharmacology.

Also related to condensed matter, the Physics Group recently had its electronic structure code, Questaal, highlighted in the Psi-k newsletter: 'Questaal: Electronic Structure for the future'. Questaal addresses a problem notorious among electronic structure communities, the many-body interactions of electrons within condensed matter, which means it can accurately predict an intrinsically diverse range of static and dynamical materials phenomena.

Elsewhere in the Physics Group, Ian Bush has been invited to join the "ARCHER2 training panel", which will approve each annual training programme, address the balance of delivery routes, ensure scientific and technical diversity, and consider course topics, course levels and geographic distribution throughout the ARCHER2 service.

Image courtesy of ARCHER

The biological nature of the Life Sciences Group's work seems closer in application to coronavirus matters than that of other groups within CSE, as exemplified by the group's rapid response in taking an instrumental role in setting up the Global Molecular Modelling and Simulation Efforts Toward COVID-19**. This is an online resource that aims to coordinate efforts and maximise efficiency by bringing together people, projects and resources. CSE's James Gebbie is currently collecting and orchestrating project information, supporting access to HPC, providing ad-hoc software development support, and helping computing centres around the country cope with the influx of new users and their software requirements.

*Exascale computing is 1000 times faster than current petascale HPC. To match what an exascale computer system can do in just one second, you would have to perform one calculation (floating-point operation) every second for 31,688,765,000 years (source: Indiana University).

**For further information about this initiative, contact James Gebbie or hecbiosim@soton.ac.uk
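The arithmetic behind the exascale footnote can be reproduced in a few lines of Python. This is a minimal back-of-the-envelope sketch rather than anything from the original source: it assumes a tropical year of 365.2422 days (the footnote does not say which year length was used), and the constant and function names are illustrative only.

```python
# Back-of-the-envelope check of the exascale footnote.
# Assumption: a tropical year of 365.2422 days; the source does not state its convention.

EXASCALE_OPS_PER_SECOND = 10**18   # one quintillion calculations per second
PETASCALE_OPS_PER_SECOND = 10**15  # petascale machine, for comparison
SECONDS_PER_YEAR = 365.2422 * 24 * 60 * 60  # about 31.56 million seconds


def years_at_one_op_per_second(ops: int) -> float:
    """Years needed to perform `ops` calculations at a rate of one per second."""
    return ops / SECONDS_PER_YEAR


# Exascale vs petascale: 10**18 / 10**15
speedup = EXASCALE_OPS_PER_SECOND // PETASCALE_OPS_PER_SECOND

# One second of exascale work, done by hand at one calculation per second
years = years_at_one_op_per_second(EXASCALE_OPS_PER_SECOND)

print(f"exascale vs petascale speed-up: {speedup}x")
print(f"years to match one exascale second: {years:,.0f}")
```

With this year length the result rounds to the quoted 31,688,765,000 years; a plain 365-day year gives roughly 31.71 billion years instead, so the headline figure barely depends on the choice.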