The materials science community is a fast-moving one. New tools and techniques are constantly being developed. This is great for the community and allows many specialised problems to be tackled. However, it can be difficult to become aware of all the new (and relevant) developments, much less become an expert in them. The number of tabs open in my browser at any one time is testament to this.

When I see an interesting new method described in a paper, my first instinct is to try it out. I want to see how it works in practice and how it compares to other methods. Exploring these has taken me on some delightful curiosity tangents and has proved extremely useful in my own work. However, this approach can also be an enormous time sink. I often find myself spending hours trying to get a new tool to work, only to find that it doesn't do what I want, that it's not as good as the tool or method I was already using, or, most frequently, that it works great but I don't actually have any current use for it.

There are many examples of this in my case, from new features in density functional theory codes to new ways to visualise crystal structures to new programming languages. In this post, I'll discuss some of the benefits of early adopting and some of the pitfalls to avoid.

The benefits of early adopting

One of the main reasons to seek out and try shiny new things is that it's fun! This can be a huge motivator, especially when projects are going slowly or you're feeling stuck. After all, being on the cutting edge of knowledge is one of the main reasons we are drawn to academia in the first place.

Being aware of the functionality and 'unique selling points' of the various alternatives can also be a huge time saver and improve the quality of your work. I sometimes see people using tools in ways they were never designed for, simply because they are expert users of that tool or are unwilling to shop around for something more suitable. For example, when I was an undergrad, I spent hours in Microsoft Excel trying to calculate the positions of Si atoms for a specific surface reconstruction. Excel was the wrong tool for the job (it just happened to be what I had experience with), so not only did the task take a long time, the result was also hard to generalise: when I later wanted to modify the structure (e.g. increase the number of Si layers), I had to start from scratch.
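To make the Excel anecdote concrete, here is a minimal sketch of how the same kind of task looks once it is parametrised in a script. It is only an illustration under stated assumptions: it builds an ideal, unreconstructed Si(001) slab rather than the specific reconstruction I was working on, the function name `si001_slab` is made up for this post, and the lattice constant is the commonly quoted approximate value for silicon.

```python
# Approximate lattice constant of silicon in angstrom (assumed value).
A_SI = 5.431

# Fractional (x, y) coordinates of the two atoms in each of the four
# distinct (001) layers of the conventional diamond-cubic cell.
LAYER_XY = [
    [(0.00, 0.00), (0.50, 0.50)],
    [(0.25, 0.25), (0.75, 0.75)],
    [(0.50, 0.00), (0.00, 0.50)],
    [(0.75, 0.25), (0.25, 0.75)],
]


def si001_slab(n_layers, nx=1, ny=1, a=A_SI):
    """Cartesian positions (angstrom) of an ideal, unreconstructed Si(001) slab.

    Hypothetical helper for illustration: layers are stacked along z with a
    spacing of a/4, and the in-plane cell is repeated nx by ny times.
    """
    positions = []
    for layer in range(n_layers):
        z = layer * a / 4.0
        for ix in range(nx):
            for iy in range(ny):
                for fx, fy in LAYER_XY[layer % 4]:
                    positions.append(((ix + fx) * a, (iy + fy) * a, z))
    return positions


# Changing the slab thickness or lateral size is a one-argument change,
# not a fresh start.
slab = si001_slab(n_layers=8)
thicker = si001_slab(n_layers=12, nx=2, ny=2)
print(len(slab), len(thicker))  # prints: 16 96
```

The point is not this particular function, but that the number of layers is now an argument rather than something baked into a spreadsheet. In practice, a dedicated library such as ASE would be a better choice than hand-rolled code like this.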

Figure 1. A selection of atomistic simulation codes that have interfaces to the Atomic Simulation Environment (ASE). Many do very similar things, but each has a unique selling point. ASE makes it easier to switch between these codes and to take advantage of their strengths.

Aside from tackling specific tasks more efficiently, early adopting can also be a great way to learn new skills and keep up with the latest theoretical developments in the field. Getting your hands dirty with a new tool can be a great way to learn about the method that underlies it and get a better feel for how it works in practice (which is not always as neat as the publication describes!).

In addition, early adopting can be a great way to get involved in a community and make new connections. By interacting on forums and issue trackers, you can get to know the people behind the tools and learn about their motivations and goals for the project. Bringing your own background and expertise to the table can be a great way to contribute to the project and help it grow.

The benefits of slowing down

While hands-on experience can indeed be a great way to learn, if the number of things you're trying to learn at once is left unchecked, you may end up with only a basic working knowledge of each, rather than any deeper understanding. The working day is finite, after all. This can inhibit your productivity, as you're unable to go beyond the tutorial level. Worse still, you may end up using tools or methods incorrectly. Having only a surface-level understanding can also exacerbate any anxiety caused by imposter syndrome (which is, unfortunately, already ubiquitous in academia).

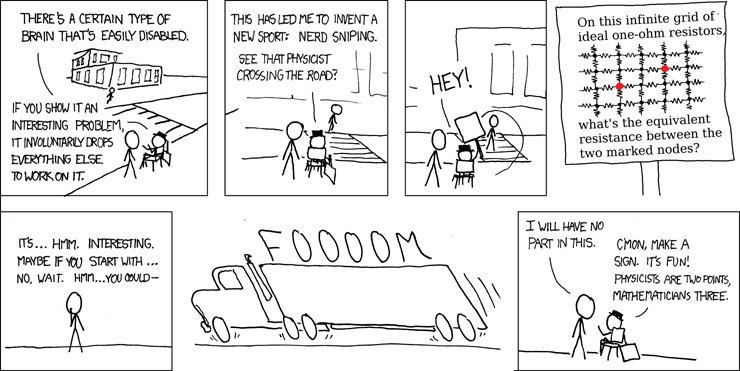

Figure 2. An obligatory XKCD comic on how easy it is to get caught up by new and interesting problems as well as some of the risks associated with that. Source: https://xkcd.com/356/.

Another drawback of chasing the latest shiny tool or method is that many of these are developed for a very specific purpose (e.g. a single publication) and then abandoned. This abandonment could happen for several reasons:

- it can be hard to find the time or funding to generalise beyond a specific research project

- researchers are generally not trained in basic software engineering practices

- or perhaps the tool simply turned out to not be as good as the existing alternatives.

Ultimately, most of these reasons boil down to inadequacies in the incentive structure of academia, a pattern that is slowly changing thanks to the rise of research software engineering (RSE) roles. In any case, it can be a huge waste of time to invest in a tool that is not going to be maintained or developed further.

Finding the right balance

Saying no to new projects is a difficult skill for anyone to learn. When it comes to new tools and methods, it can be even harder. After all, you're not just saying no to a new project, you're saying no to the possibility of learning something new and improving your work. However, finding the right balance between the benefits of early adopting and the drawbacks of chasing every new thing is essential to being productive and avoiding burnout.

In my experience, the best way to find this balance is to be aware of the benefits and drawbacks of early adopting and to be honest with yourself about your own motivations. If you have a genuine need for new functionality that has just been published, then by all means, go for it! However, if you're just looking for something new to play with, then it's probably best to wait and see if the tool is going to be maintained and developed further. If you're keen to check whether the results in a paper are as robust or reproducible as they should be, be aware that academia unfortunately doesn't generally reward this kind of work, so proceed with caution. Managing your time and setting yourself a realistic time allocation for learning a new tool are very helpful when making these decisions. If you embark on a learning expedition without a clear idea of how much time you can afford to put into it, you may well end up feeling stressed or guilty.

With the rise of research-software-engineering-type positions and the increased uptake of training such as the Software Carpentry workshops, the barrier to getting started with new tools and methods is getting lower. Research software increasingly comes with better-written documentation, more robust user interfaces, more stable code, and container images (e.g. Docker). All of this makes it easier than ever to get started. However, it's a double-edged sword: lowering the entry barrier can make it easier for some of us to get distracted by the latest shiny thing, so choosing wisely is more important than ever.